| | |
|---|---|
| Thesis Type | |
| Student | Sharma, Anmol |
| Status | Finished |
| Supervisor(s) | Stefan Decker |
| Advisor(s) | Sulayman K. Sowe, Soo-Yon Kim, Fidan Limani, Sayed Hoseini, Zeyd Boukhers |
| Contact | sowe@dbis.rwth-aachen.de, kim@dbis.rwth-aachen.de, flimani@zbw-workspace.eu, sayed.hoseini@hs-niederrhein.de, zeyd.boukhers@fit.fraunhofer.de |

FAIR data is one of the most important pillars of research data management. The term refers to data or datasets that are Findable, Accessible, Interoperable, and Reusable. A fundamental challenge for researchers is assessing the FAIRness of their research data with the various platforms and tools available. Most of these tools are based on established assessment frameworks such as the RDA FAIR Data Maturity Indicators, which are then implemented either as guidelines, as manual question-and-answer checklists filled in by the data owner, or as automatic assessments such as the one provided by the F-UJI Automated FAIR Data Assessment Tool.
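To make the automatic assessment approach more concrete, here is a minimal Python sketch of a rule-based checker that runs a fixed set of indicators over a dataset's metadata record. The indicator codes, the checks, and the metadata field names (`doi`, `title`, `access_url`, `license`, ...) are hypothetical simplifications loosely inspired by the FAIR principles; they are not the RDA indicators or the metrics implemented by F-UJI, and real tools apply considerably more elaborate tests.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical, simplified indicators loosely inspired by the FAIR principles.
# They are NOT the RDA FAIR Data Maturity Indicators or F-UJI's metrics.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")


@dataclass
class Indicator:
    code: str                      # short identifier, e.g. "F1"
    description: str               # human-readable meaning of the check
    check: Callable[[dict], bool]  # returns True if the metadata passes


INDICATORS = [
    Indicator("F1", "Dataset has a DOI as persistent identifier",
              lambda m: bool(DOI_PATTERN.match(str(m.get("doi") or "")))),
    Indicator("F2", "Title, description and keywords are present",
              lambda m: all(m.get(k) for k in ("title", "description", "keywords"))),
    Indicator("A1", "Data is retrievable via an HTTP(S) URL",
              lambda m: str(m.get("access_url") or "").startswith(("http://", "https://"))),
    Indicator("R1.1", "A licence is declared",
              lambda m: bool(m.get("license"))),
]


def assess(metadata: dict) -> dict[str, bool]:
    """Run every indicator against one metadata record; return pass/fail per code."""
    return {ind.code: ind.check(metadata) for ind in INDICATORS}


def fairness_score(results: dict[str, bool]) -> float:
    """Fraction of indicators passed, in [0, 1]."""
    return sum(results.values()) / len(results)


if __name__ == "__main__":
    record = {
        "doi": "10.1234/example.5678",
        "title": "Example dataset",
        "description": "Toy metadata record for illustration",
        "keywords": ["example"],
        "access_url": "https://example.org/data.csv",
        "license": "",
    }
    results = assess(record)
    print(results)                  # {'F1': True, 'F2': True, 'A1': True, 'R1.1': False}
    print(fairness_score(results))  # 0.75
```

A checklist of this kind is deterministic and transparent, but every indicator has to be hand-coded, which is one of the limitations an LLM-based approach might help with.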

The advent of Large Language Models (LLMs) opens up new possibilities for FAIR data assessment. With their advanced natural language processing capabilities, LLMs have the potential to enhance information retrieval, knowledge synthesis, and hypothesis generation.

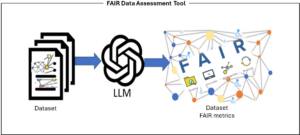

The goal of the thesis, as depicted in the figure above, is to leverage the benefits inherent in LLMs to develop a tool for FAIR data assessment.
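One conceivable way to bring an LLM into the assessment is to ask it for a structured verdict per indicator and to validate that answer before using it. The Python sketch below assumes four hypothetical indicator codes and covers only prompt construction and response parsing; the actual model call is deliberately left out, since it depends on which LLM and API the thesis ends up using.

```python
import json

# Hypothetical indicator codes; a real tool would take them from an
# established framework such as the RDA FAIR Data Maturity Indicators.
INDICATOR_CODES = ["F1", "F2", "A1", "R1.1"]


def build_prompt(metadata: dict) -> str:
    """Compose an instruction asking the LLM to judge each indicator and answer in JSON."""
    return (
        "You are assessing the FAIRness of a research dataset.\n"
        f"For each indicator in {INDICATOR_CODES}, decide whether the metadata "
        "below satisfies it. Answer only with a JSON object mapping each "
        'indicator code to {"pass": true or false, "reason": "<short text>"}.\n\n'
        f"Metadata:\n{json.dumps(metadata, indent=2)}"
    )


def parse_response(text: str) -> dict[str, bool]:
    """Parse the model's JSON answer; raise ValueError if it is malformed or incomplete."""
    data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("LLM response is not a JSON object")
    results = {}
    for code in INDICATOR_CODES:
        entry = data.get(code)
        # Accept only a real JSON boolean, not strings such as "false".
        if not isinstance(entry, dict) or not isinstance(entry.get("pass"), bool):
            raise ValueError(f"Missing or invalid verdict for indicator {code}")
        results[code] = entry["pass"]
    return results


# A canned answer standing in for real model output.
canned = (
    '{"F1": {"pass": true, "reason": "DOI present"},'
    ' "F2": {"pass": true, "reason": "title, description, keywords given"},'
    ' "A1": {"pass": true, "reason": "HTTPS URL"},'
    ' "R1.1": {"pass": false, "reason": "no licence stated"}}'
)
print(parse_response(canned))  # {'F1': True, 'F2': True, 'A1': True, 'R1.1': False}
```

Strict validation matters here because LLM output is free text: rejecting malformed answers, rather than guessing, keeps the verdicts comparable with those of a classical checker.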

The dataset for training the LLM will be selected from a data portal such as Kaggle (https://www.kaggle.com/datasets), Hugging Face (https://huggingface.co/datasets), or FAIRsharing.org (https://fairsharing.org/FAIRsharing.cc3QN9).

TASKS:

- Review existing literature on FAIR data assessment tools and LLMs

- Find relevant datasets for comparing classical FAIR data assessment methods with LLM-based methods

- Implement an LLM-based FAIR data assessment tool

- Evaluate “classical” FAIR data assessment methods against the LLM-based approach, covering the performance and usability of the LLM-based FAIR data assessment tool

- Utilize the LLM to generate recommendations to improve the FAIRness of particular datasets
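For the evaluation task, one option is to run both approaches on the same datasets and measure how often their per-indicator verdicts agree. The Python sketch below computes raw agreement and Cohen's kappa, which corrects for agreement expected by chance. The verdict lists are made-up illustration data, not results, and using kappa as the comparison metric is an assumption rather than part of the thesis description.

```python
from collections.abc import Sequence


def percent_agreement(a: Sequence[bool], b: Sequence[bool]) -> float:
    """Share of items on which both methods give the same pass/fail verdict."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("Both methods must judge the same, non-empty list of items")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[bool], b: Sequence[bool]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    p_observed = percent_agreement(a, b)
    n = len(a)
    pa, pb = sum(a) / n, sum(b) / n             # share of "pass" verdicts per method
    p_expected = pa * pb + (1 - pa) * (1 - pb)  # agreement expected by chance alone
    if p_expected == 1:
        # Both methods gave one identical constant verdict; kappa is undefined, report 1.0.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical verdicts, flattened over (dataset, indicator) pairs.
classical = [True, True, False, True, False, False, True, True]
llm_based = [True, False, False, True, False, True, True, True]
print(percent_agreement(classical, llm_based))       # 0.75
print(round(cohens_kappa(classical, llm_based), 3))  # 0.467
```

Here the two methods agree on 75% of the items, but because both pass most items, part of that agreement is expected by chance, which is why kappa comes out noticeably lower.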

Related project

NFDI4DS: https://www.nfdi4datascience.de/

References

- Raza, Shaina et al. (2024). FAIR Enough: How Can We Develop and Assess a FAIR-Compliant Dataset for Large Language Models’ Training? https://api.semanticscholar.org/CorpusID:267069102

- Wilkinson, M., Dumontier, M., Aalbersberg, I. et al. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Sci Data 3, 160018. https://doi.org/10.1038/sdata.2016.18

- GO FAIR. FAIRification Process. https://www.go-fair.org/fair-principles/fairification-process/

- Ahmad, Raia Abu et al. (2024). Toward FAIR Semantic Publishing of Research Dataset Metadata in the Open Research Knowledge Graph. https://api.semanticscholar.org/CorpusID:269137144

- FAIR for AI: An Interdisciplinary and International Community Building Perspective. https://www.nature.com/articles/s41597-023-02298-6

- FAIR AI models in high energy physics. https://iopscience.iop.org/article/10.1088/2632-2153/ad12e3/meta

- Soiland-Reyes, S., Sefton, P., Leo, S., Castro, L. J., Weiland, C., & Van De Sompel, H. (2024). Practical webby FDOs with RO-Crate and FAIR Signposting: Experiences and lessons learned. International FAIR Digital Objects Implementation Summit 2024. https://pure.manchester.ac.uk/ws/portalfiles/portal/300255290/TIB_FDO2024_signposting_ro_crate-11.pdf

Examples of existing FAIR data assessment tools:

- F-UJI

- EOSC FAIR EVA: https://fair.csic.es/en

- AutoFAIR

- FAIR Shake: https://fairshake.cloud/

- FAIR-Checker: https://fair-checker.france-bioinformatique.fr/

- The Evaluator: https://fairsharing.github.io/FAIR-Evaluator-FrontEnd/

- DANS Self-Assessment Tool: https://satifyd.dans.knaw.nl/

- EUDAT Fair Data Checklist: https://zenodo.org/records/1065991

- FAIRMetrics/Metrics (GitHub): https://github.com/FAIRMetrics/Metrics

Prerequisites

- Natural Language Processing (NLP)

- Interest in working with Large Language Models

- Some knowledge of LLM (pre-)training, tokenization, and fine-tuning techniques